Privacy-Compliant AI for Mental Health Documentation: Best Practices & Tool Guide

AI mental health tools help therapists document sessions faster while keeping patient data safe. This guide covers HIPAA compliance, privacy regulations, and how to choose the best AI for mental health notes. Learn key features of secure platforms and implementation strategies that protect confidentiality without sacrificing efficiency.

AI in mental health is growing fast. It helps therapists take notes, track progress, and even suggest treatments. Keeping patient data safe remains essential, so privacy-compliant mental health AI must follow rules like HIPAA and GDPR. This guide shows how to choose tools that protect confidentiality and how to document with them.

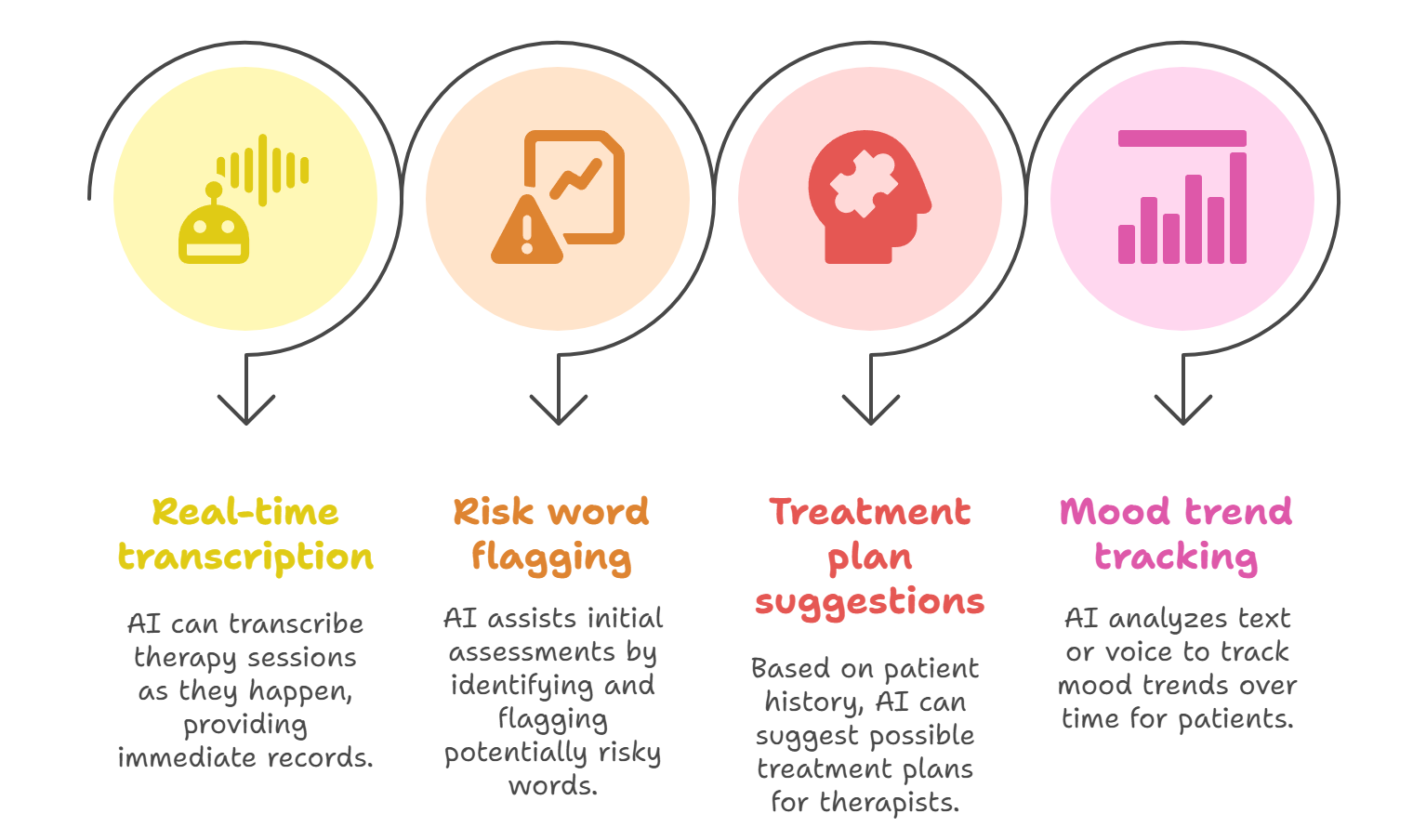

What Is AI Used for in Mental Health?

AI technology is changing mental health care. It supports therapists from first screening to long-term tracking.

Core Applications of Mental Health AI

AI tools can:

- Transcribe therapy sessions in real time

- Help with initial assessments by flagging risk words

- Suggest treatment plans based on patient history

- Track mood trends via text or voice analysis
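
As a rough illustration of flagging risk words, the sketch below scans a transcript for terms from a watch list. The `RISK_TERMS` list and the `flag_risk_terms` function are hypothetical names invented for this example; a real tool would rely on clinically validated screening methods rather than simple keyword matching.

```python
import re

# Hypothetical watch list for illustration; not a clinically validated set.
RISK_TERMS = ("hopeless", "self-harm", "suicide", "overdose")


def flag_risk_terms(transcript: str, terms=RISK_TERMS) -> list[str]:
    """Return the watch-list terms found in the transcript, in watch-list order."""
    text = transcript.lower()
    found = []
    for term in terms:
        # Word boundaries keep "hopeless" from matching inside "hopelessness".
        if re.search(rf"\b{re.escape(term)}\b", text):
            found.append(term)
    return found


print(flag_risk_terms("I have felt hopeless since the overdose last year."))
```

A match should only prompt the clinician to take a closer look; it is not an assessment in itself.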

Documentation-Specific AI Functions

When it comes to notes, AI can:

- Automate SOAP, DAP, and BIRP note formats

- Integrate directly with EHRs for seamless workflows

- Convert speech to text, with some platforms advertising over 95% accuracy
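
To make the note formats concrete, here is a minimal sketch of a SOAP note as a data structure. The `SoapNote` class and its methods are assumptions made for illustration and do not describe any particular product's schema.

```python
from dataclasses import dataclass


@dataclass
class SoapNote:
    subjective: str  # what the client reports
    objective: str   # what the clinician observes
    assessment: str  # the clinician's interpretation
    plan: str        # agreed next steps

    def missing_sections(self) -> list[str]:
        """List sections left empty, so a draft can be flagged for review."""
        return [name for name, value in vars(self).items() if not value.strip()]

    def render(self) -> str:
        """Format the note as labelled lines, one per section."""
        return "\n".join(
            f"{name.capitalize()}: {value}" for name, value in vars(self).items()
        )


draft = SoapNote("Reports poor sleep.", "Flat affect.", "", "Weekly sessions.")
print(draft.missing_sections())
print(draft.render())
```

Checking for empty sections before saving is one simple way to catch an incomplete AI draft.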

Privacy Regulations Impacting AI Mental Health Tools

Understanding compliance rules is crucial before using any AI solution. Mental health data needs extra protection under many laws.

HIPAA Requirements for AI Mental Health Tools

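HIPAA covers protected health information (PHI) handled by covered entities and their business associates, including AI-generated notes. Key rules include:

- Data should be encrypted in storage and in transit (the Security Rule treats encryption as an addressable safeguard, so skipping it requires a documented, equivalent alternative)

- Only authorized staff can access patient records

- AI vendors that handle PHI need signed Business Associate Agreements (BAAs)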

Healthcare organizations paid $4.18 million in HIPAA fines in 2023, double the amount from 2022. Many violations happened when practices used non-compliant AI tools.

GDPR Compliance for Global Practices

European practices face stricter rules under GDPR:

- Patients can request all their data or ask for deletion

- Data cannot leave the EU without proper safeguards

- Patients are entitled to meaningful information about solely automated decisions that significantly affect them

Only 45.5% of mental health apps responded properly to GDPR data requests in recent studies.

State-Level Privacy Laws

California's CCPA and similar state laws add more requirements. These laws give people more control over their personal data. Records already protected under HIPAA are generally exempt from the CCPA, so confirm which law governs your therapy notes.

For detailed compliance guidance, check out The Ins and Outs of HIPAA Compliant Note Taking Apps.

Key Features of Privacy-Compliant AI Documentation Platforms

Not all AI mental health tools protect privacy equally. Here are the must-have features that separate secure platforms from basic transcription services.

Data Security Infrastructure

Strong AI platforms use:

- End-to-end encryption that scrambles data from device to server

- Zero-trust systems that verify every access request

- Cloud storage in specific geographic regions for compliance

User Access and Authentication

Secure systems require:

- Multi-factor authentication (password plus phone code)

- Different access levels for doctors, nurses, and admin staff

- Automatic logout after periods of inactivity
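
The access rules above can be sketched as a single check that combines role permissions with an inactivity limit. The role names, permission names, and the 15-minute limit are all assumptions chosen for the example, not requirements taken from HIPAA or from any vendor.

```python
from datetime import datetime, timedelta

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "clinician": {"read_notes", "write_notes"},
    "supervisor": {"read_notes"},
    "admin": {"read_schedule"},
}
IDLE_LIMIT = timedelta(minutes=15)  # assumed inactivity timeout


def is_allowed(role: str, action: str, last_activity: datetime, now: datetime) -> bool:
    """Allow an action only for an active session whose role grants it."""
    if now - last_activity > IDLE_LIMIT:
        return False  # session timed out; the user must log in again
    return action in ROLE_PERMISSIONS.get(role, set())


now = datetime(2025, 1, 6, 10, 0)
print(is_allowed("clinician", "write_notes", now - timedelta(minutes=5), now))
print(is_allowed("admin", "read_notes", now - timedelta(minutes=5), now))
print(is_allowed("clinician", "read_notes", now - timedelta(minutes=30), now))
```

Unknown roles get an empty permission set, so the check denies by default.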

Compliance Monitoring and Reporting

The best platforms automatically:

- Check for compliance violations in real time

- Create detailed logs of who accessed what data

- Alert administrators to potential security issues
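
One way to keep access logs trustworthy is to chain each entry to the previous one with a hash, so later edits become detectable. The sketch below shows that idea using only the Python standard library. The field names and the `append_entry` and `verify_chain` functions are hypothetical, and a production system would also need secure storage and time sources.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(body: dict) -> str:
    """Hash an entry body with a stable key order."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, user: str, action: str, record_id: str, timestamp: str) -> dict:
    """Append an access entry that includes the hash of the previous entry."""
    body = {
        "user": user,
        "action": action,
        "record_id": record_id,
        "timestamp": timestamp,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, "dr_lee", "read", "note-17", "2025-01-06T10:00:00Z")
append_entry(log, "dr_lee", "edit", "note-17", "2025-01-06T10:05:00Z")
print(verify_chain(log))
```

Changing any field of an earlier entry makes `verify_chain` return `False`, which is the kind of event that could trigger an administrator alert.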

Currently, 67% of healthcare workers think AI tools will help solve burdens associated with prior authorization.

Learn more about security features in AI Medical Scribe Security: Complete HIPAA Compliance Guide for Healthcare.

How to Document Mental Health with AI Tools

Using AI for documentation requires careful planning. This structured approach balances efficiency with clinical accuracy and privacy protection.

Pre-Implementation Setup

Before starting:

- Train all staff on new AI workflows and privacy rules

- Plan how the AI will connect with your current EHR system

- Update practice privacy policies to mention AI use

Session Documentation Workflow

During sessions:

- Start AI transcription with clear patient consent

- Review and edit AI-generated notes immediately after sessions

- Check that all clinical details are accurate before saving
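
The workflow above can be sketched as a gate that refuses to save a draft until consent and clinician review are both recorded. `DraftNote` and `save_blockers` are hypothetical names used only for this example.

```python
from dataclasses import dataclass


@dataclass
class DraftNote:
    text: str
    consent_given: bool = False       # patient agreed to AI transcription
    clinician_reviewed: bool = False  # clinician read and corrected the draft


def save_blockers(note: DraftNote) -> list[str]:
    """Return the reasons a draft cannot be saved yet (empty list means ready)."""
    problems = []
    if not note.consent_given:
        problems.append("missing patient consent")
    if not note.clinician_reviewed:
        problems.append("not reviewed by clinician")
    if not note.text.strip():
        problems.append("empty note")
    return problems


draft = DraftNote(text="Client reports improved sleep.", consent_given=True)
print(save_blockers(draft))
```

Returning the full list of problems, rather than stopping at the first, lets the interface show the clinician everything that still needs attention.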

AI-optimized patient records can reduce EHR review time by 18% compared to manual documentation.

Data Management and Storage

For ongoing data protection:

- Set clear rules for how long to keep AI-generated notes

- Create secure backup systems with encryption

- Only collect the minimum data needed for treatment
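
A retention rule can be expressed as a small date calculation. The seven-year period below is an assumption for the example only, since required retention periods vary by jurisdiction and record type. The function names are also hypothetical.

```python
from datetime import date

RETENTION_YEARS = 7  # assumed for illustration; check the rules that apply to you


def retention_deadline(created: date, years: int = RETENTION_YEARS) -> date:
    """Return the date on which a note reaches the end of its retention period."""
    try:
        return created.replace(year=created.year + years)
    except ValueError:
        # Created on 29 February and the target year is not a leap year.
        return created.replace(year=created.year + years, day=28)


def notes_due_for_deletion(notes: dict[str, date], today: date) -> list[str]:
    """Return the IDs of notes whose retention period has ended, sorted by ID."""
    return sorted(
        note_id for note_id, created in notes.items()
        if retention_deadline(created) <= today
    )


notes = {"note-a": date(2015, 1, 1), "note-b": date(2024, 1, 1)}
print(notes_due_for_deletion(notes, today=date(2025, 6, 1)))
```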

For note format guidance, see SOAP vs DAP Notes: A Therapist's Guide to Choosing the Right Documentation Format.

What Is the Best AI for Mental Health Notes?

Choosing the right AI platform means evaluating features, compliance standards, and how well it works with your practice.

Evaluation Criteria for AI Mental Health Tools

Consider these factors:

- Accuracy: Look for platforms with 95%+ transcription accuracy

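- Compliance: Confirm the vendor signs a BAA and can document its HIPAA and GDPR safeguards (no official government HIPAA certification exists, so ask for independent audit reports)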

- Integration: Check compatibility with your EHR system

- Cost: Compare total costs including setup and training
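
Accuracy figures are easier to compare when you know how they are measured. One common metric for transcription is word error rate (WER), the word-level edit distance between a reference transcript and the AI output, divided by the reference length. This guide does not say which metric vendors use, so treating "95% accuracy" as roughly "5% WER" is an assumption. The sketch below computes WER on a sample you transcribe by hand.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # previous[j] holds the distance between the reference words seen so far
    # and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


wer = word_error_rate(
    "client reports improved sleep this week",
    "client reports improved sheep this week",
)
print(f"WER: {wer:.3f}")
```

Running this on a few of your own recordings, with consent, gives a figure you can compare across vendors on equal terms.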

Comparative Analysis Framework

HealOS stands out because it was built specifically for mental health practices. It includes templates for SOAP, DAP, and BIRP notes that therapists actually use.

Privacy Policy Essentials for AI Mental Health Software

A strong privacy policy builds trust and meets legal requirements when using AI documentation systems.

Required Privacy Policy Components

Your policy must explain:

- What patient data the AI collects and why

- How long data is stored and where

- Who can access the information and under what conditions

- How patients can request changes or deletion

Patient Consent Management

Effective consent requires:

- Clear explanations of AI use in simple language

- Easy ways for patients to opt out of AI documentation

- Written records of all consent decisions
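
Consent records can be kept as an append-only list in which the most recent decision applies. This is a minimal sketch under that assumption; `ConsentDecision` and `current_consent` are hypothetical names, and a missing record is treated as no consent.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ConsentDecision:
    patient_id: str
    allows_ai: bool        # True if the patient agreed to AI documentation
    recorded_at: datetime  # when the decision was written down


def current_consent(decisions: list[ConsentDecision], patient_id: str) -> bool:
    """Return the patient's latest decision; no record means no consent."""
    relevant = [d for d in decisions if d.patient_id == patient_id]
    if not relevant:
        return False
    return max(relevant, key=lambda d: d.recorded_at).allows_ai


history = [
    ConsentDecision("p1", True, datetime(2025, 1, 1, 9, 0)),
    ConsentDecision("p1", False, datetime(2025, 2, 1, 9, 0)),  # later opt-out
]
print(current_consent(history, "p1"))
```

Keeping every decision, instead of overwriting the last one, preserves the written history of when a patient opted in or out.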

Remember that patients have the right to refuse AI documentation while still receiving care.

GDPR-Compliant Mental Health Chatbots

European practices and global organizations serving EU clients face extra complexity when using AI chatbots for mental health support.

GDPR-Specific Requirements for AI Chatbots

EU-compliant chatbots must:

- Have a documented lawful basis for processing personal data

- Allow patients to download all their data in standard formats

- Explain any automated decisions the AI makes about treatment

Technical Implementation Strategies

To meet GDPR requirements:

- Store EU patient data only on European servers

- Use approved methods for any data transfers outside the EU

- Build automatic deletion features for patient data requests
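
Export and deletion requests can be sketched against a simple in-memory store. The `store` layout and both function names are assumptions made for the example. A real system would also have to cover backups, logs, and any copies held by vendors.

```python
import json


def export_patient_data(store: dict, patient_id: str) -> str:
    """Return all records for one patient as JSON, a common portable format."""
    payload = {"patient_id": patient_id, "records": store.get(patient_id, [])}
    return json.dumps(payload, indent=2, sort_keys=True)


def erase_patient_data(store: dict, patient_id: str) -> int:
    """Delete all records for one patient and return how many were removed."""
    return len(store.pop(patient_id, []))


# Hypothetical store: patient ID mapped to a list of chat records.
store = {
    "p1": [{"date": "2025-01-06", "message": "Checked in about sleep."}],
    "p2": [],
}
print(export_patient_data(store, "p1"))
print(erase_patient_data(store, "p1"))
```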

One successful example is a therapy chatbot that automatically erases personally identifiable information after each session while keeping anonymous clinical insights for research.
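
How that chatbot removes identifiers is not described here. As one possible approach, the sketch below masks email addresses and phone numbers with regular expressions. The patterns are deliberately simple assumptions: they can miss identifiers such as names and can over-match other digit sequences such as dates, so real de-identification needs far more than this.

```python
import re

# Simplified patterns for illustration; not a complete PII detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Reach me at jo@example.com or +44 20 7946 0958."))
```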

Frequently Asked Questions

Here are answers to common questions about privacy-compliant AI documentation systems.

How is AI used in mental health?

AI helps with session transcription, progress tracking, treatment suggestions, and administrative tasks. It can analyze speech patterns and text to identify mood changes or risk factors.

How do you document mental health sessions with AI tools?

Start with patient consent, use a HIPAA-compliant platform whose vendor signs a Business Associate Agreement, review all AI-generated notes before saving, and maintain secure data storage practices.

What is the best AI for mental health notes?

The best AI combines high accuracy (95%+), strong privacy protection, mental health specialization, and seamless EHR integration. HealOS leads in these areas.

Is AI mental health documentation HIPAA compliant?

Only when the platform comes with a signed Business Associate Agreement, encryption, and access controls. Many general-purpose AI tools do not meet these requirements.

What happens to patient data in AI systems?

In compliant systems, data is encrypted, stored securely, and only accessed by authorized personnel. Patients retain rights to view and correct their information and, under laws such as GDPR, to request deletion.

Despite growing adoption, mental health apps show only 3.3% user retention after 30 days, highlighting the importance of choosing user-friendly, compliant solutions.

For more technical details, read AI clinical notes vs Human clinical notes: Which is better?

Conclusion

Privacy-compliant AI for mental health documentation is not just possible—it's essential for modern practices. With 970 million people worldwide living with mental disorders, therapists need tools that help them provide better care while protecting sensitive information. The right AI platform combines accuracy, security, and ease of use to support both clinicians and patients.

Ready to see how HealOS can transform your practice while keeping patient data secure? Book a demo today and discover why leading mental health professionals choose our privacy-first approach to AI documentation.
